Luke. han, co-founder & CEO of Kyligence and creator of Apache Kylin, a top Apache open-source project, shares his thoughts on the impact of AI on the open-source space. Han, the creator of Apache Kylin, will share his in-depth thoughts on the impact of AI on the open-source field, which covers the commercialization path of open-source projects, the impact of technological changes on the industry landscape, and the innovation of AI on data analysis and business decision-making paradigm, reflecting that the big data and analytics industry is undergoing a profound change, calling for practitioners to actively respond and innovate.

Apache Kylin graduated in 2015, and Kyligence was founded in 2016. In the past few years, we have accumulated a lot of practice and summarization by iterating and updating our technology to adapt to new technology trends.

On the occasion of the New Year, I would like to take this opportunity to share with you some of our reflections, introduce our observations and reflections on relevant trends, and some of our perceptions of the future. I hope that together, we can contribute to the change in this industry.

Open source can’t make big money

This is a topic I hate to bring up, but it’s true.

From a business perspective, open source is not a business model, it’s just a marketing tool. And commercially, without effective commercialization means, open source users won’t be converted into paying users, which can be deeply felt from the practice of many friends in the industry.

Many people don’t know us well enough to think that we are letting users use open-source Kylin first and then convert to the commercial version, and this misunderstanding has been going on for many years. Apache Kylin had accomplished several important community efforts by the time we left eBay:

Graduated to a top ASF program, building brand and awareness

It was used on a large scale by several major Internet vendors, including Baidu, NetEase, Headline, Meituan, etc., which honed its technical maturity.

Built community and influence as China’s first ASF top-tier open-source project.

Therefore, when we founded Kyligence, we started commercialization, and almost all of our customers talked directly about the enterprise version and went for commercial cooperation from the very beginning, which is why so many of our head customers have continued to work with us for many years. Today, we have accumulated so many enterprise customers, only a very few of them are using Apache Kylin and then converted to the commercial version, especially banks and other financial customers, the beginning of the enterprise features, security, resource management, and services, such as demanding requirements, which are also the design goals of the enterprise version. Customers never want just the technology, but the product design, service assurance, and continuous innovation behind the technology.

Business is business, and all of us must have a deep understanding of why customers pay and why they are willing to pay us so much money. The underlying technology breakthrough is difficult, but often in the product, the real money is not the most profound technology, we just need to improve some user experience, and change some process flow, as long as it can help users save labor, cost, improve efficiency, customers are willing to pay – each technology point, have to design the value proposition to customers, and Not just claim that the technology is great, must be recognized by the customer. This requires us to go deeper into the customer site, more to understand the actual needs of customers, pain points, and itch points.

Of course, we do not deny the value of open source. We have benefited greatly from open-source technologies and communities, so we will continue to invest in, participate in, and continue to lead various open-source projects.

Hadoop is dead

Hadoop, as the representative of Big Data, used to be very popular and had a huge market opportunity. Unfortunately, in 2021, with the sale of MapR and privatization of Cloudera, Hadoop is dead. There are too many reasons for this, but from my perspective, the main reason is the split in the community and the conservative business strategy.

In 2017, Doug Cutting (the father of Hadoop) thought about how the Hadoop ecosystem would be in the next ten years on the occasion of Hadoop’s tenth anniversary. Less than 5 years later, the industry is hardly talking about Hadoop-related technologies anymore – there are only minor modifications and no stunning projects have emerged.

In the first five years of our startup, we were fortunate to follow the expansion of big data and data lakes, when banks were migrating their MPP data warehouse-based applications to Hadoop-based big data platforms. However, with the decline of Hadoop vendors, we can feel the rapid changes in the market, and with the rise of cloud computing, cloud data warehouses, cloud data lakes, and very quickly appearing in the market, “data warehousing” technology schools gradually split. The domestic situation is even worse, breeding a variety of customized Hadoop, and magic private cloud, making the market very complex, but it is difficult to make excessive profits.

In 2021, the leader of a bank contacted us to give a lecture, directly admitted that “Hadoop is over“, and asked us to discuss with their architecture team how the big data platform should go after Hadoop, and how to migrate the existing architectures, applications, etc. At that time, we were very sensitive to the fact that Hadoop was the most important platform in the world. At that time, we were very sensitive to the fact that we had to iterate and transform faster.

Over the past two years or so, we can feel that Hadoop-based platforms are slowing down their construction, with part of them reverting to MPP (based on data warehouses, with big data/data lakes supporting part of the business), and part of them moving towards cloud-native architectures (based on data lakes, moving towards lake warehouses as a whole). I predict that this complex hybrid architecture should persist for at least 5+ years in the future.

BI will be evolved

Modern BI tools are almost always visualization tools, and the reason why they need such strong visualization is that humans can’t understand the data directly, giving people 0 and 1 is not directly interpreted and understood. Graphics can help humans quickly understand, whether a certain indicator is up or down, a factor that another to have a greater impact, which results indicator is decomposed by several process indicators, and so on. Excellent visualization capabilities are a powerful tool to help analysts efficiently complete analysis, summary, and exploration.

But today, when AI can directly read and analyze data, there is no need to front a visualization tool. Directly to the AI data 0 and 1, you can let the AI quickly give the conclusion of the analysis: is it up or down? Why is it behind? What factors influence it? How big is the impact? What other reasons ……AI generated efficiency gains, is ten times to more than a hundred times. It is equivalent to AI doing most of the work that analysts used to need to do, and humans only need to make choices, judgments, and slight corrections. This is the first point that the AI era has brought great changes to the data and analytics industry.

There’s a great analogy in the automation industry: don’t let the robots think. Now that AI is available, humans can ask AI for results and recommendations, rather than still letting AI do the inefficient work.

Our product today already has all these capabilities and we can go one step further to change the industry. Provide users with a dynamic, smart, and efficient Decision Assistant/Copilot, not just a data presentation tool.

Performance is not the key, Performance will be the new key

One of the challenges we often encounter in OLAP scenarios is Performance.

While performance is our strong suit, we often go to great lengths to help our customers speed up a SQL by a few seconds, or to make them wait a few seconds less when they click on a dashboard.

Performance is also one of the most common and competitive points in the technology PK. A well-performing system/OLAP is, of course, very good, but when everyone is down to seconds, there’s nothing comparable. And often changing a data structure, or a data Pipeline, can improve performance by orders of magnitude.

So why do you need such good performance? Does performance still matter in the age of AI? We believe that the reason why you need great performance on the OLAP or data warehouse side is that a lot of the data analysis work relies on a limited number of analysts or business users, and this group of people is under a lot of business pressure, and often the data comes out of a very heavy task, such as comparing the data, reviewing the history, analyzing the causes, rerunning the possibilities, etc., and forming reports and making decisions, Recommendations for action. So a very good tool is essential.

But in the age of AI, a lot of work here can be entrusted to AI to complete, or even let AI in advance to complete, especially the inherent, commonly used analysis routines.AI can quickly give a general summary, which has been able to greatly save manpower.AI can make all kinds of automated system connection.

Today, we use Kyligence Zen to produce a weekly report or do an attribution, it only takes about ten seconds, and it comes with a summary, which is a qualitative improvement over the traditional process: produce results, check the data, write a report ……. Performance, especially query performance, in this scenario has been less stringent. Instead, concurrency becomes the next challenge as more and more people come to use the system. Concurrency happens to be our strength.

Recently, more customers have shifted their focus from performance to another meaning of Performance: performance.

A metrics platform is essentially a KPI platform, and KPI stands for Key Performance Indicator. When we shifted our focus from performance to performance, we suddenly realized that this is what customers want: dashboards or reports are never the result, they want data-based management capabilities. Almost every useful indicator/metric shows a business or management result, and the reason why a company needs metrics is to better observe the progress of the relevant business, the state of health, and to take timely measures to modify the organization’s behavior, to ensure that the company’s overall or part of the company’s performance can be by the set goals.

We need to think beyond technology to differentiate ourselves in terms of Performance, increase the ROI of our technology investments, and quickly capture the market.

AI is eating the world

AI is cannibalizing software, something NVIDIA’s Jen-Hsun Huang mentioned in a 2017 interview, and by today, no one should doubt that assertion. The question now is how AI will change software in different areas, and in our industry, it’s how AI will change the data and analytics market.

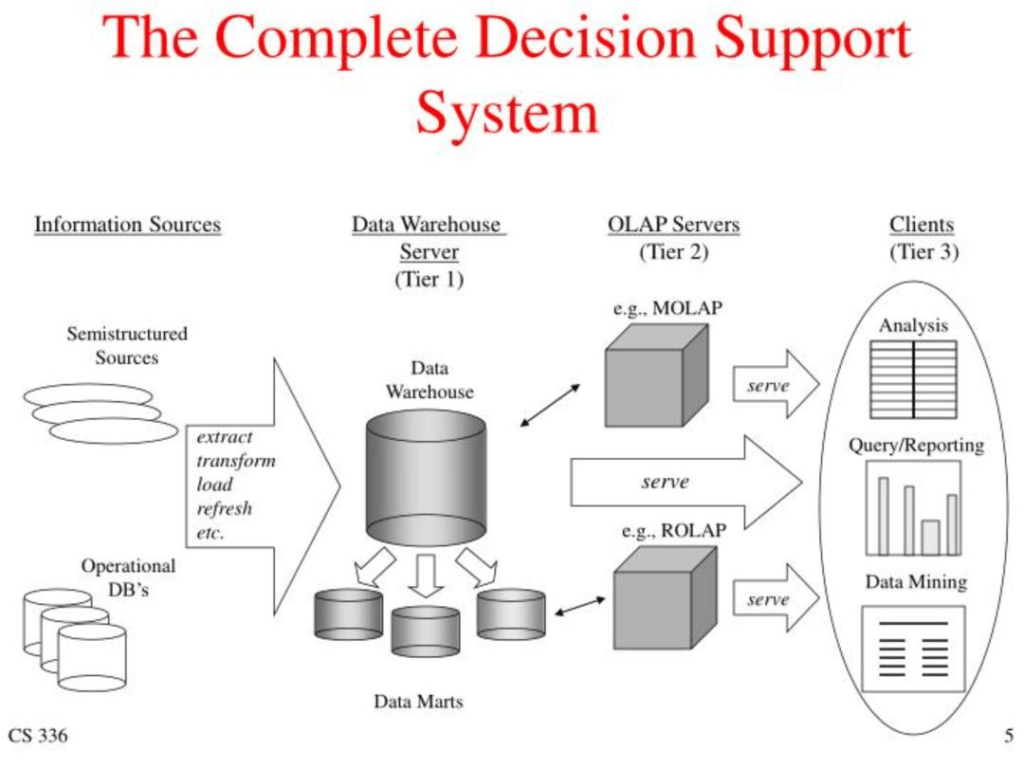

In the digital warehouse world, the above diagram has been used for more than 30 years: “Data Sources” – “ETL” – “Data Warehouses” – “OLAP/Data Marts” – “BI/Reporting” topped with “OLAP/Data Mart – BI/Reporting, topped off with ” Metadata” and “Analytics Forecasting”.

Whether ELT or ETL, data lake or data warehouse, local deployment or cloud deployment, all of which assume that the data needs to go through a long engineering process, from the raw data extraction, organized into a star or snowflake model, to provide to the upper layers of BI and so on. The end user, as a user, is often the last to be empowered, resulting in a large amount of data that is still underutilized today.

The emergence of Generative AI (Generative AI) has led to a dramatic change in the process of data, revolutionizing the way data is processed. First of all, all kinds of complex and repetitive labor, especially the Pipeline of data, will be handled by AI agents. From small row and column conversion to large data governance, there should be a lot of AI Agents to handle these jobs in the future, and human beings only need to design a reasonable process with cue words. This can be seen in ChatGPT’s own Advanced Data Analysis and many data analysis tools that use OpenAI’s Code Interpreter, and even GPTs can do a lot of data combing and analyzing work with a few simple prompt words.

I wrote about it in an internal document at the time:

If you don’t have AI, you’re going to be replaced by an advanced AI

Secondly, human-machine interaction has evolved to the most natural way and the way data is consumed has been revolutionized. As long as you can talk, you can use data, which is a huge change brought by AI this time. This means the original leaders, analysts, and professional users can only use the “data and analytical capabilities”, all of a sudden common people to everyone, even if the cultural level of the user is limited, can also be fully empowered. This will dramatically change the way data is structured, processed, and consumed.

Most companies now have no more than 10 ~ 15% of employees who can effectively use data, and this AI revolution will enable the remaining 85 ~ 90% to directly consume data or data products, which can be predicted to be unsatisfactory for the existing data architecture. The change here has just begun, our practice at the forefront of the industry, recently received a lot of feedback from the market, said that our products do very pragmatic and good use, and even a head of the joint-stock banks and I talked about whether to provide them with a product consulting to teach them how to do the product – this shows that our design, experience and functionality, to get the end-user acceptance and are influencing the industry.

When everyone can and needs to consume data, is the traditional data warehouse or data lake architecture still applicable, and what kind of changes will happen to the way data is stored? This is an open question, and I don’t have a concrete answer at this point, but it is foreseeable that AI scenarios will inevitably require systems to process more data, access it more flexibly, and serve more people more efficiently. From the architecture of most of today’s MPP and big data, these aspects should soon face a huge challenge, when the access is ten times larger than now, a hundred times or even ten thousand times, today any data system to be completed in a cost-controlled situation is very difficult, and here we look forward to the future and customers to work together to study and explore and work together to break through the limits of the place.

AI can bring, will be much more than these. AI will bring a deep revolution in data and analysis. Machines will replace humans to do more work, especially the repetitive, and can be automated. In the past, after the data system completed the processing of data and statistics of indicators, a large number of human beings were needed to analyze the influence of the elements that affect the change of the relevant indicators, explore the root causes, and provide relevant decision-making recommendations based on experience.

Simple practice can see huge changes, and the potential here is unlimited. I hope everyone can use their imagination to let AI change data and analysis, and even the operation and management of the whole enterprise.