OpenAI co-founder Sam Altman has barely begun dealing with his new feud with Musk, and already a rival has quietly struck:

On March 4, Anthropic released its latest generation of large models, Claude 3, without warning, just eight months after the release of Claude 2.

Anthropic is one of OpenAI's strongest rivals, and its core team originally came from OpenAI. The team left over philosophical differences with OpenAI and founded Anthropic in 2021.

In 2023 alone, Anthropic closed five consecutive funding rounds totaling $7.3 billion. It is arguably in the industry's top tier for large-model training, and it draws a great deal of attention in Silicon Valley.

Comprehensively surpasses GPT-4

The new Claude 3 family comes in three versions: Haiku, Sonnet, and Opus. In terms of model size, think of them as the medium, large, and extra-large cups.

- Haiku: the fastest and lowest-cost model. It still performs quite well on most text-only tasks and also has multimodal capabilities (e.g., vision).
- Sonnet: for scenarios that need to balance performance and cost. On plain-text tasks it performs close to Opus while costing less, which suits businesses and individual users who need somewhat better performance on a limited budget.
- Opus: powerful reasoning, math, and coding capabilities that approach human-level comprehension, for scenarios that demand a high degree of intelligence and complex task handling, such as enterprise automation, complex financial forecasting, and research and development.

Although OpenAI scored a notable win in text-to-video with Sora, video generation is still at a much earlier stage of development. The main battleground today remains large language models (LLMs), which are also much closer to becoming products.

Now that Claude 3 is out, one can almost sit back and wait for OpenAI to answer within hours with a carefully timed GPT-5 release.

2024 is only two months old, and we have already seen Google's Gemini Pro, OpenAI's Sora, and now Claude 3. The melee among the giants has kicked off once again, and it looks set to grow ever more intense.

Reads 150,000 words in one go and breaks down complex problems on its own

If OpenAI is the all-rounder (the "hexagonal warrior") of the large-model field, leaving rivals in the dust across models, video, and commercialization, then Anthropic's style has been more low-key and more focused on specialized strengths. This time, however, the leap in capability is genuinely large.

Claude 3 ended the GPT-4 era.

The two major highlights of this Claude 3 update are long text and multimodal capabilities.

Long-text handling is one of Anthropic's significant strengths: its models are better at understanding long texts such as essays and novels and at answering users' questions about them.

This time, Claude 3 offers a 200K-token context window, which can be thought of as the amount of text that can be fed to the model in a single conversation.

Specifically, Claude 3's 200K-token window corresponds to roughly 150,000 English words in a single session, compared with about 96,000 words for GPT-4 Turbo's 128K-token window.
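The word counts above are consistent with a common rule of thumb of roughly 0.75 English words per token. Here is a minimal Python sketch of that conversion, assuming that ratio (it is an approximation that varies by tokenizer and by text; the function name `approx_words` is purely illustrative):

```python
# Assumption: about 0.75 English words per token on average.
# This is a rule of thumb, not an exact property of any tokenizer.
WORDS_PER_TOKEN = 0.75


def approx_words(context_tokens: int, words_per_token: float = WORDS_PER_TOKEN) -> int:
    """Estimate how many English words fit in a context window of the given token size."""
    return round(context_tokens * words_per_token)


if __name__ == "__main__":
    # Context window sizes as stated in the article.
    for name, tokens in [("Claude 3", 200_000), ("GPT-4 Turbo", 128_000)]:
        print(f"{name}: {tokens:,} tokens ~ {approx_words(tokens):,} words")
```

Real word counts for a given document will differ, since non-English text and code typically use more tokens per word.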

And, for the first time, Claude 3 can take images as input, including photos and documents such as charts and diagrams. Like ChatGPT, it "recognizes" what is in a picture, describes it directly, and answers the user's questions about it.

Even more impressive, Claude 3 can now tackle complex problems much as a person would: it first breaks the problem into parts and then dispatches those parts to sub-models.

-

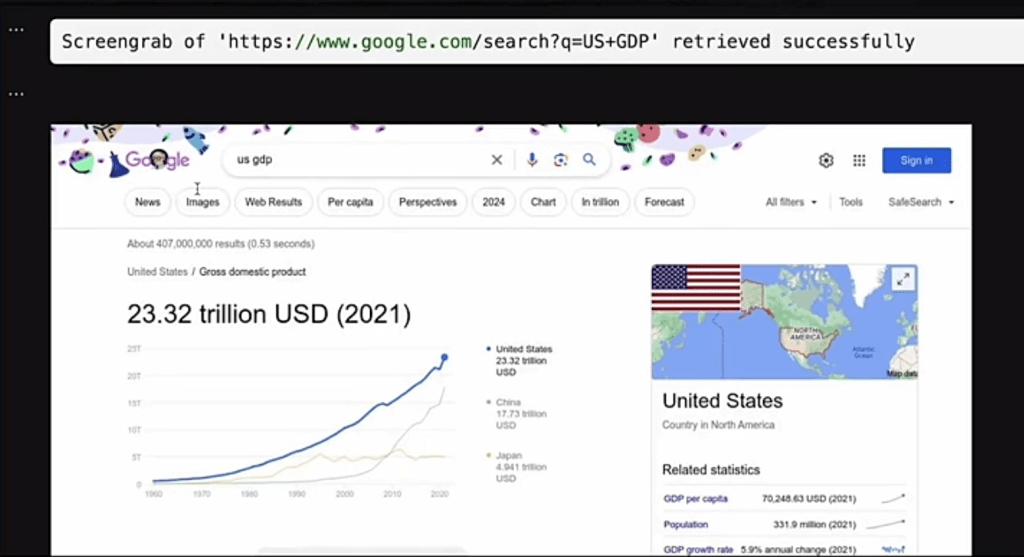

First, he opened a tool called “webview” and jumped to a web site related to the problem.

-

Because of his multimodal capabilities, he was able to pull down the information he “saw”, whether it was text or graphs, and use it to solve the problem.

-

Then you can write your own python program and render the trend graphs for humans to see if they are correct.

Most interesting of all, when a web page shows a chart but not the underlying numbers, Claude 3 can read the image, estimate the value at each point, and reconstruct the data.

AI safety is another point of difference between Anthropic and OpenAI. When the two teams split, the central disagreement was over how much weight to give AI safety: Anthropic wanted to build a "more trustworthy" model, while OpenAI clearly wanted to push its models forward faster and more aggressively, driven by commercialization.

To that end, Anthropic's measures include, but are not limited to, a framework for evaluating and mitigating potentially catastrophic risks from AI models, such as ongoing automated evaluations and red-team testing to make sure the models do not develop capabilities that could cause harm.

In April 2023, Anthropic even caused a stir in the industry by publicly releasing details of Constitutional AI, an approach that constrains a model's behavior to follow a specific set of principles (a "constitution").

And because 2024 is a US election year, Anthropic is also preparing policies on the use of its tools in political and electoral contexts, evaluating how its models respond to election misinformation, bias, and other abuses, and working to ensure that users in selected countries can get accurate, up-to-date voting information.

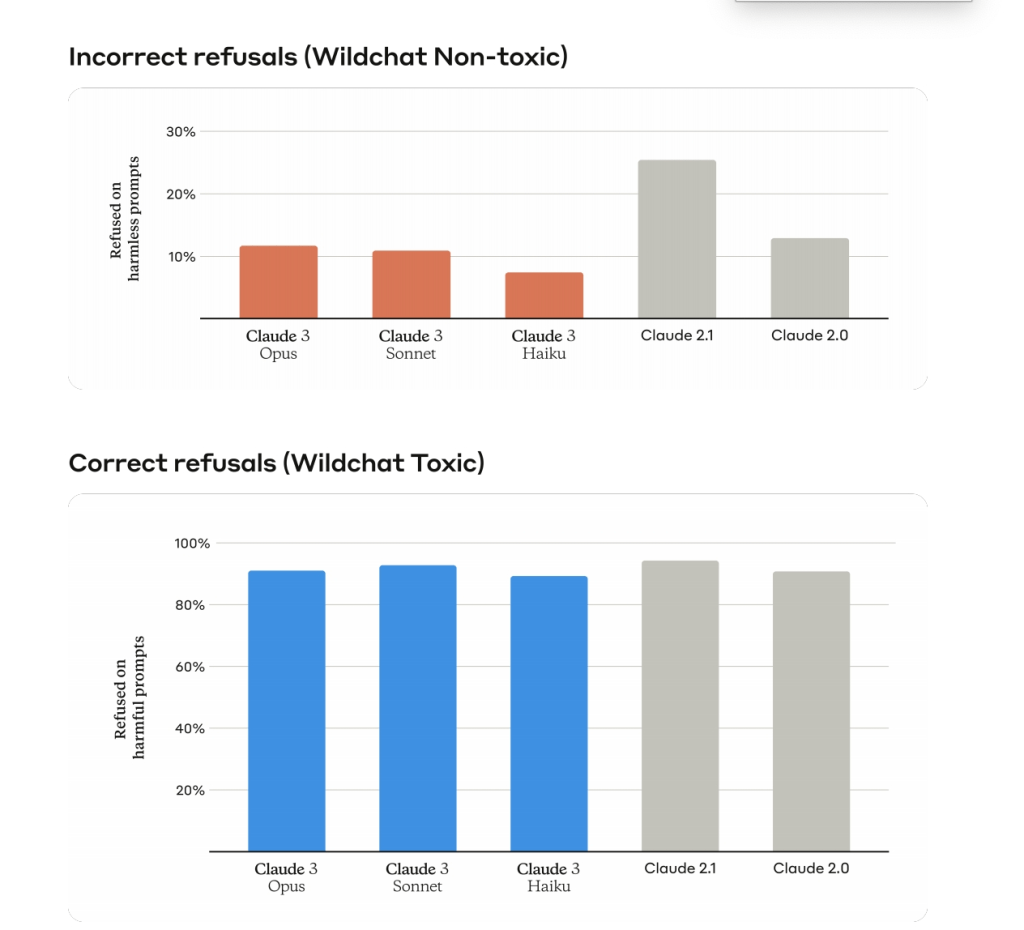

However, Anthropic has also been criticized for taking AI safety too far: its models have been overly cautious with many questions, or refused to answer them outright, drawing plenty of mockery from users. This time, Anthropic says Claude 3 greatly improves on this.

For example, Claude 3's rate of refusing harmless prompts is generally around 10%, lower than that of both Claude 2.1 and Claude 2.0.

According to CNBC, Anthropic declined to say exactly how much time and money it spent training Claude 3, but said that well-known SaaS companies such as Airtable and Asana helped A/B test the model, which also helped improve its controllability.

Beyond technology, Anthropic needs to speed up commercialization

For all of Anthropic's technical prowess, the large-model field has now been through more than a year of technological competition. Turning models into real products that create greater commercial value is the sword hanging over every vendor's head.

Today Anthropic is backed by Google and Amazon, forming a camp that stands in sharp contrast to OpenAI and its backer Microsoft. Commercially, however, Anthropic still trails OpenAI by a wide margin, which means it will face more pressure in 2024.

Like OpenAI, Anthropic stands on two legs: business (To B) and consumer (To C). On the enterprise side, Anthropic already serves customers including Slack, Notion, and Quora.

As reported by The Information in December 2023, Anthropic expects to reach more than $850 million in annualized revenue by the end of 2024. By comparison, by the end of 2023 OpenAI's annualized revenue had already risen to $1.6 billion, up from $1.3 billion in mid-October, thanks to strong growth of ChatGPT.

The gap is likely to widen as OpenAI's commercialization accelerates. The Information cited several OpenAI executives who expect the company to reach $5 billion in annualized revenue by the end of 2024, while others believe the figure could be much higher.

The technological arms race is far from over. As of December 2023, Anthropic was still in the midst of a new funding round of up to $750 million at a valuation of $18.4 billion, roughly 4.5 times its $4.1 billion valuation at the beginning of 2023.