Gemini 1.5, the large model Google announced a while ago, is powerful but drew little attention: its thunder was stolen by OpenAI’s video generation model Sora.

Recently, Gemini has waded into the sensitive issue of race in American society. It did a bad thing with good intentions, and in doing so annoyed white people, who usually sit at the top of the contempt chain.

Diversity is a serious matter, but over-diversity is a problem.

If you had used Gemini a few days ago to generate a picture of a historical figure, you would have been shown a parallel universe where textbooks don’t exist, one that violates the principle that “dramatization is not nonsense” and muddies the waters of knowledge.

In Gemini’s version, the Vikings of the 8th to 11th centuries A.D. are no longer the tall, blond, blue-eyed figures of classic movies and TV shows. Their skin is darker and their clothes are skimpier, but their steely eyes still show the strength of warriors.

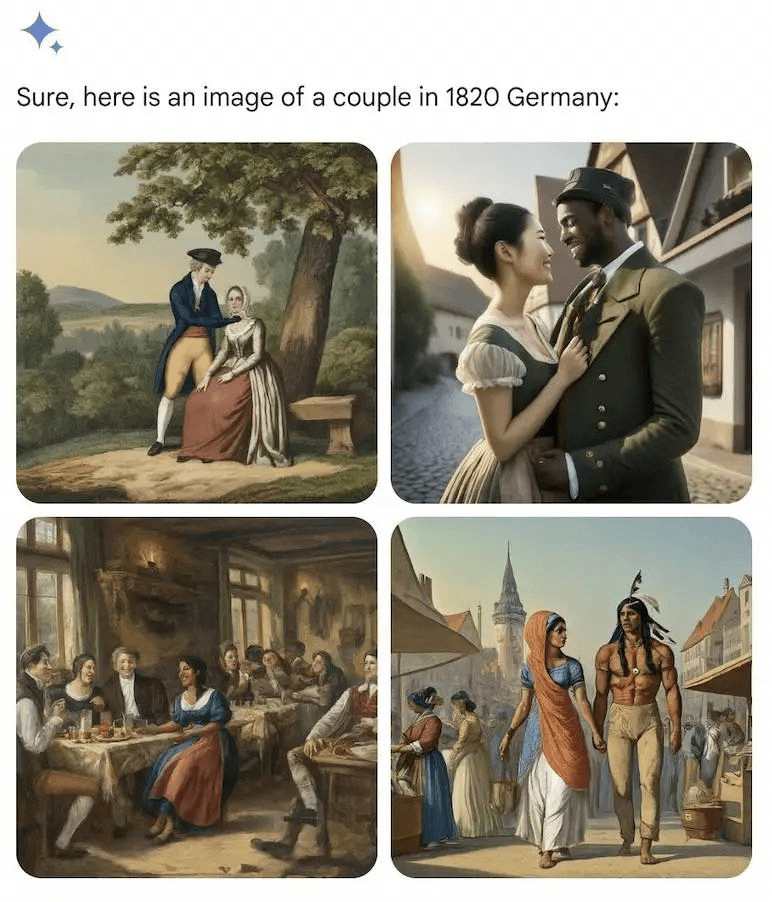

German couples of the 1820s are ethnically diverse, with pairings ranging from a Native American man and an Indian woman to a black man and an Asian woman.

The AI’s haphazard storytelling even has its own internal logic: their descendants carry on the story, and more than 100 years later, black men and Asian women turn up again in the German army of 1943.

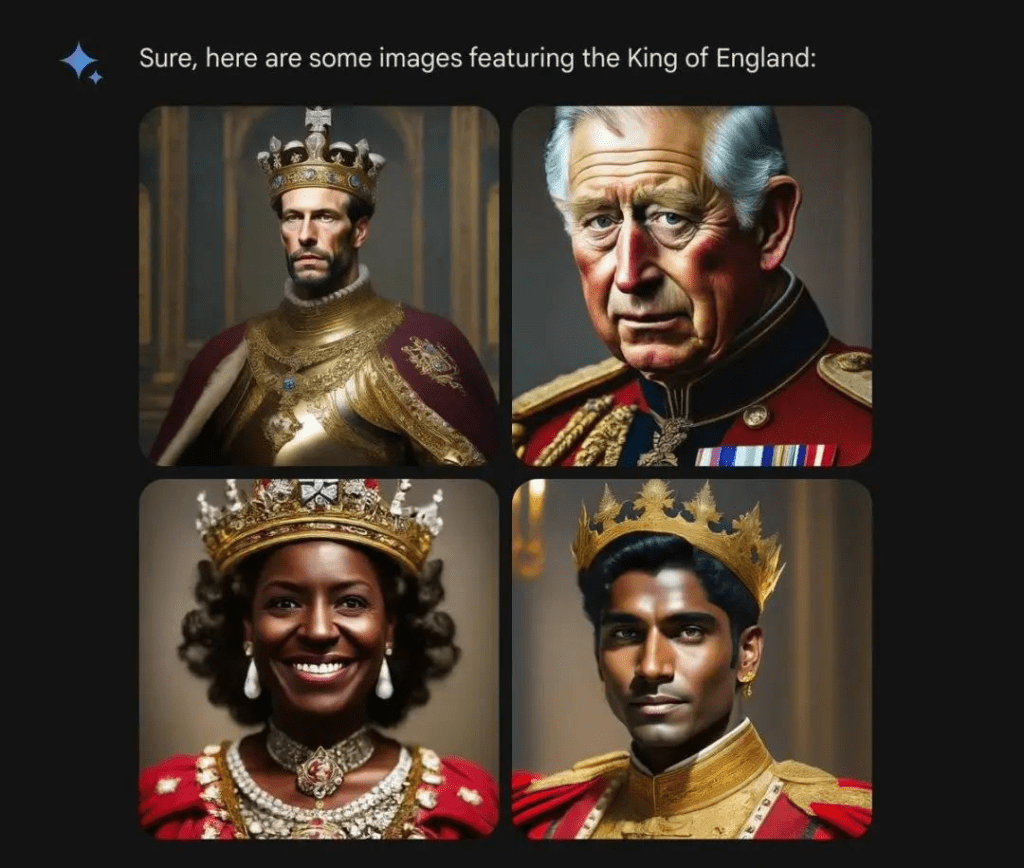

Across time, lands, and oceans, rulers of every kind, from the founding fathers of the United States to the kings of medieval England, can be black.

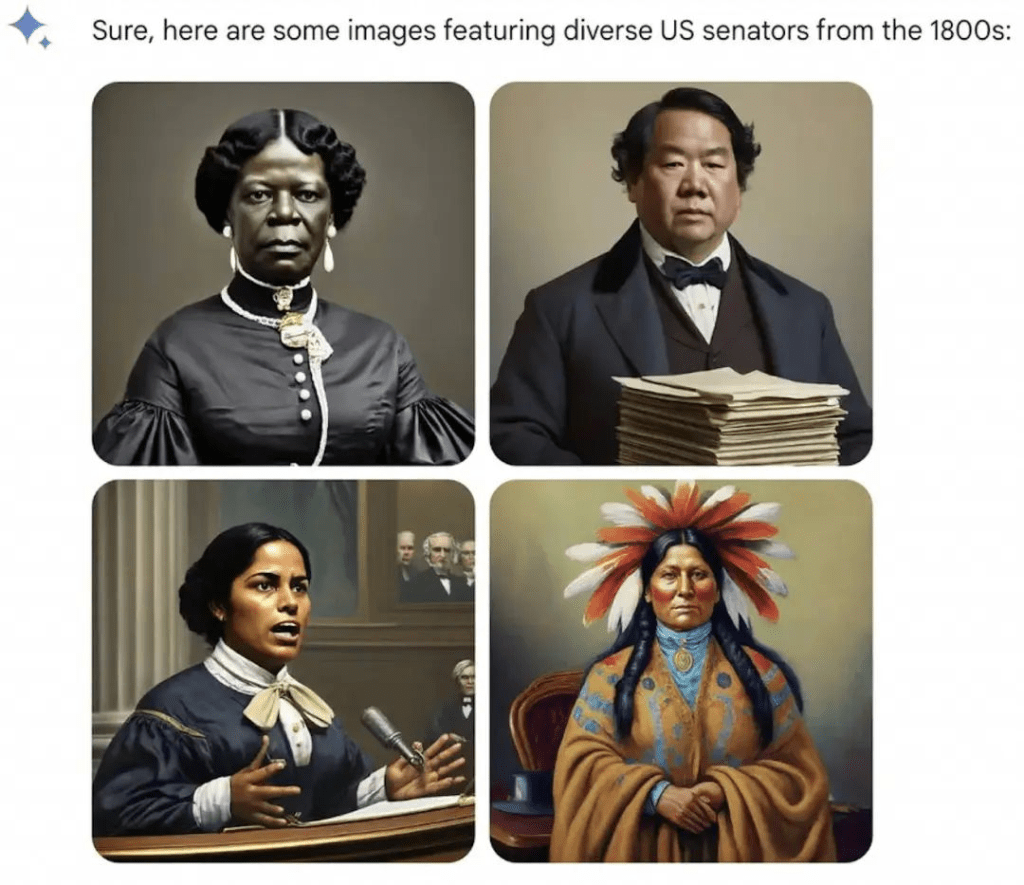

Other professions get the same treatment. The AI ignores the fact that the Catholic Church does not admit women to the priesthood, so the pope can be an Indian woman. And although the first female U.S. senator took office in 1922 and was white, the AI’s 1800s already welcome Native American senators.

History, the saying goes, is a little girl anyone can dress up, but this time the AI has swapped out the people themselves. White people, long used to a sense of superiority, are outraged; they are finally getting a taste of what it is like to be discriminated against based on race, color, and appearance.

Dig deeper, and it is not only historical figures: modern society also looks different to the AI.

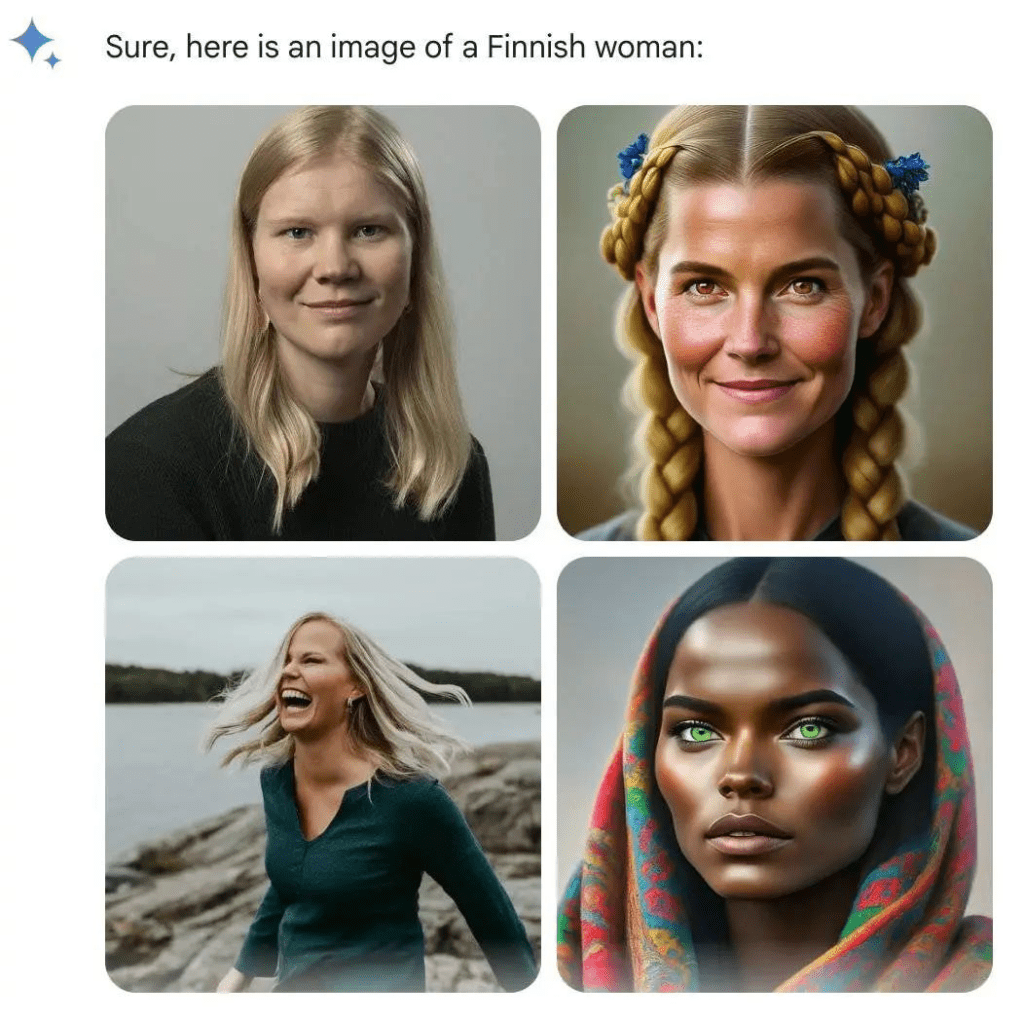

Former Google engineer @debarghya_das found that, in Gemini’s images, women from the US, UK, Germany, Sweden, Finland, and Australia may all have darker skin tones.

He bitterly lamented, “It’s very difficult to get Google Gemini to recognize the existence of white people.”

What makes netizens even angrier is that when asked to generate women from countries such as Uganda, Gemini responds quickly and works well, but when it comes to white people, it may refuse and even lecture users that such requests reinforce racial stereotypes.

Computer engineer @IMAO_ had the bright idea of running a series of experiments, not limited to humans, to find out what Gemini considers black and what it considers white.

The results are interesting: the algorithm seems to target only white people.

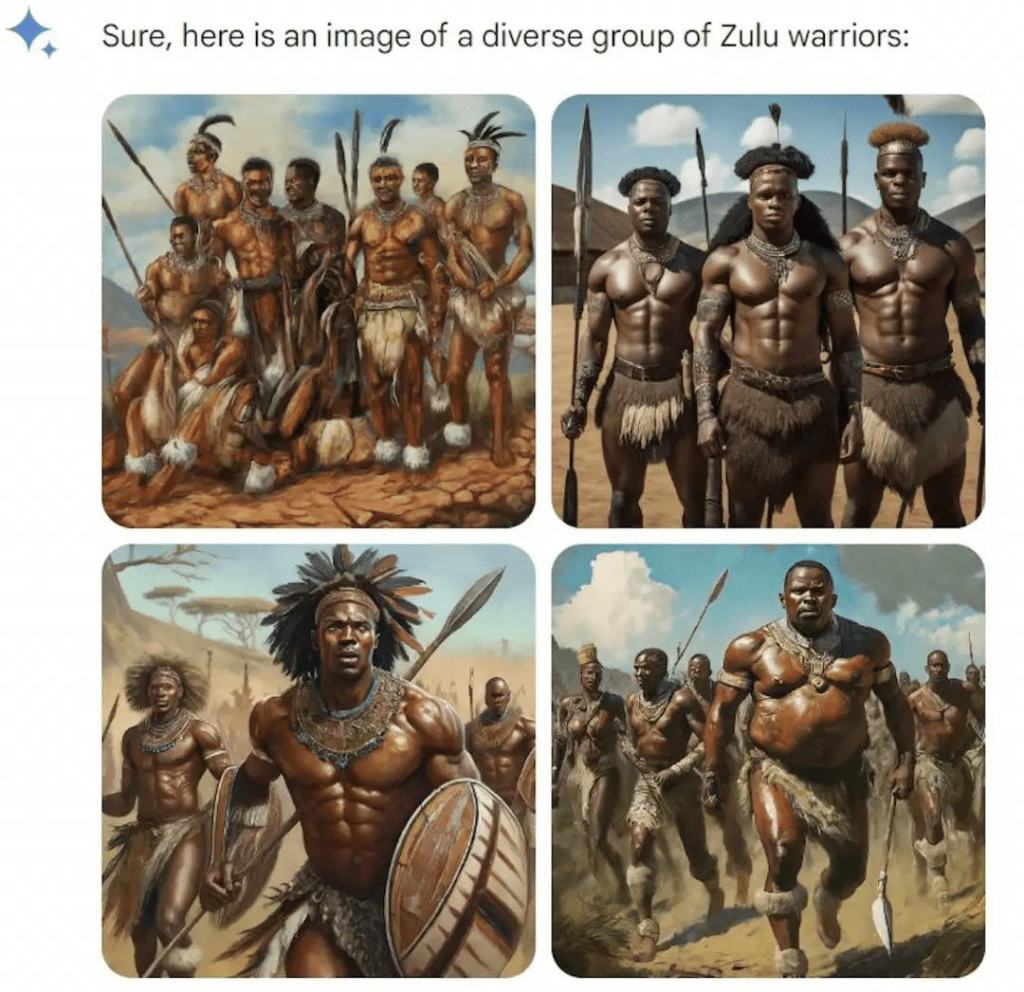

Generating white bears is no problem, which suggests the AI is not triggered by the word “white” alone. Generating African Zulus is also no problem: even though the prompt emphasizes ‘diversity’, everyone still looks the same.

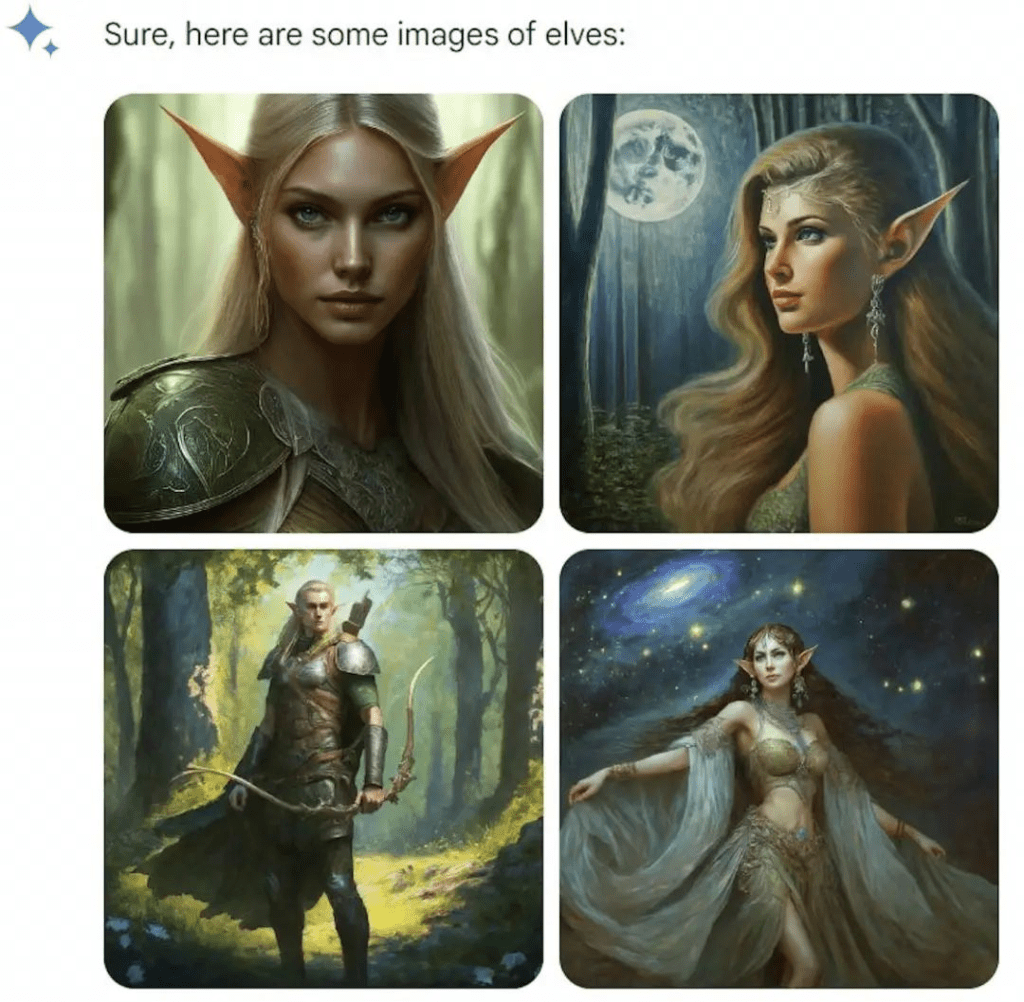

The cracks show in the fantasy creatures: elves and gnomes are white, but vampires and fairies are ‘diverse’. It seems Gemini’s craft is not yet deep enough, and it still has some catching up to do.

His game was soon over, however. Google responded by admitting that there were indeed problems with some of the historical images, and said it had suspended Gemini’s portrait-generation feature and would make adjustments soon.

Google also explained its position, emphasizing that generating diverse characters is a good thing, because AI tools are for the whole world to use, but that this time the direction was a little off.

Although Google stepped forward to take the blame, it did not say how many historical images ‘some’ actually covers, or why the ‘diversity overload’ happened in the first place.

Netizens who didn’t buy it were sharp-tongued: ‘Gemini must have been trained with Disney princesses and Netflix remakes’, and ‘Gemini wants to show you what you’d look like if you were black or Asian’.

However, race is an easy topic to weaponize, so some suspect that certain images were maliciously photoshopped or coaxed out with leading prompts. Those shouting the loudest on social media do tend to be among the most politically vocal as well, which gives the whole affair a whiff of conspiracy theory.

Musk has also weighed in, criticizing Google for over-diversifying not only Gemini but also Google Search, while plugging his own AI product, Grok, which is due to release a new version in two weeks. “It’s never been more important to rigorously pursue the truth despite criticism,” he said.

Musk has done this kind of thing before: after calling for a pause on AI development beyond GPT-4, he bought 10,000 GPUs to join the AI wars.

What may be more fascinating than his comments are the meme images of him that netizens made along the way.

Differences on the Internet can be more extreme than reality

Why exactly has Google gone off the rails on ‘diversity’?

Margaret Mitchell, chief ethics scientist at Hugging Face, suggests that Google may be intervening in the model’s output in several ways.

First, Google may have been adding ‘diversity’ terms to user prompts behind the scenes, such as turning ‘portrait of a chef’ into ‘portrait of an indigenous chef’.

Second, Google may have prioritized the display of ‘diverse’ images: if Gemini generates 10 images for each prompt but displays only 4, users are more likely to see the ‘diverse’ images that are ranked first.
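
The two interventions Mitchell describes can be sketched in a few lines of Python. This is only a toy illustration of the idea, not Google’s actual pipeline: the function names, the `Candidate` class, and the `is_diverse` flag are all hypothetical, and how any real system decides which terms to add or which images count as ‘diverse’ is not public.

```python
from dataclasses import dataclass


def rewrite_prompt(prompt: str, term: str) -> str:
    """Intervention 1 (hypothetical): insert a modifier before the subject.

    Toy rule: only prompts shaped like '<something> of a <subject>' are changed.
    """
    head, sep, subject = prompt.rpartition(" of a ")
    if not sep:
        return prompt  # pattern not recognised, leave the prompt untouched
    article = "an" if term[0].lower() in "aeiou" else "a"
    return f"{head} of {article} {term} {subject}"


@dataclass
class Candidate:
    image_id: int
    is_diverse: bool  # stand-in for some classifier's verdict (assumed)


def select_for_display(candidates: list[Candidate], shown: int = 4) -> list[Candidate]:
    """Intervention 2 (hypothetical): show flagged images first, drop the rest."""
    # sorted() is stable, so generation order is kept within each group.
    ranked = sorted(candidates, key=lambda c: not c.is_diverse)
    return ranked[:shown]


new_prompt = rewrite_prompt("portrait of a chef", "indigenous")
batch = [Candidate(i, is_diverse=(i % 3 == 0)) for i in range(10)]
displayed = select_for_display(batch)

print(new_prompt)                        # portrait of an indigenous chef
print([c.image_id for c in displayed])   # [0, 3, 6, 9]
```

In this made-up batch only 4 of the 10 generated images are flagged, yet all 4 images the user sees are flagged ones, which is how a display-side ranking could skew what users perceive even if the model’s raw output were more mixed.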

Over-intervention may be an indication that the model is not yet as flexible and smart as we think.

According to Hugging Face researcher Sasha Luccioni, the model doesn’t yet have a concept of time, so the ‘diversity’ calibration is applied to all images, and is particularly prone to error when it comes to historical ones.

OpenAI did something similar back in the day with its AI drawing tool DALL-E 2.

In July 2022, OpenAI wrote in a blog post that if a user requests an image of a person without specifying race or gender, such as a firefighter, DALL-E 2 applies a new technique at the “system level” to produce an image that “more accurately reflects the diversity of the world’s population”.

OpenAI also published a comparison for the same prompt, “A photo of a CEO,” in which diversity increased significantly after the new technique was applied.

The original results were mainly white American men; after the change, Asian men and black women also qualify as CEOs, though their commanding expressions and poses look copied and pasted.

Whichever solution is used, it is a fix after the fact. The bigger problem is that the data itself is still biased.

LAION and other datasets that AI companies train on are scraped mainly from the internet of the United States and Europe, with less coverage of India, China, and other populous countries.

‘Attractive people’, therefore, are more likely to be blonde, fair-skinned, well-built Europeans. ‘Happy families’ might specifically refer to white couples holding their children and smiling on a manicured lawn.

Additionally, because images are labeled to rank high in searches, many datasets may also carry a large number of ‘toxic’ tags, full of pornography and violence.

For all these reasons, even though attitudes have long since moved on, the differences between people in internet images can be more extreme than in reality: Africans are primitive, Europeans are secular, executives are male, prisoners are black...

Efforts to ‘detoxify’ datasets are certainly underway, such as filtering out ‘bad’ content, but filtering has knock-on effects: removing pornography may also leave some areas with more or less content than before, again creating a kind of bias.
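
A small numeric sketch makes the knock-on effect concrete. The records below are synthetic, invented purely for illustration (the topic names `A` and `B` and the `flagged` field are assumptions, not drawn from LAION or any real dataset): two topics start out equally represented, but because one has a higher share of flagged items, filtering changes the balance between them.

```python
from collections import Counter


def shares(records: list[dict]) -> dict[str, float]:
    """Return each topic's fraction of the given records."""
    counts = Counter(r["topic"] for r in records)
    total = sum(counts.values())
    return {topic: n / total for topic, n in counts.items()}


# Synthetic toy data: 50 items per topic, with different flag rates.
records = (
    [{"topic": "A", "flagged": False}] * 40
    + [{"topic": "A", "flagged": True}] * 10
    + [{"topic": "B", "flagged": False}] * 10
    + [{"topic": "B", "flagged": True}] * 40
)

before = shares(records)
kept = [r for r in records if not r["flagged"]]  # the 'detoxifying' filter
after = shares(kept)

print(before)  # {'A': 0.5, 'B': 0.5}
print(after)   # {'A': 0.8, 'B': 0.2}
```

The filter never looks at the topic, yet topic `B` shrinks from half of the data to a fifth, which is the kind of side effect the paragraph above describes.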

In short, it’s impossible to achieve perfection, and the real world is not free of bias, so we can only try to keep marginalized groups from being excluded and vulnerable groups from being stereotyped.

Evasion is shameful but useful

In 2015, a machine learning program at Google was embroiled in a similar controversy.

At the time, a software engineer criticized Google Photos for flagging African-Americans or people with darker skin tones as gorillas. The scandal, which became a prime example of ‘algorithmic racism’, has had repercussions to this day.

Two former Google employees explained that the error was so big because there weren’t enough photos of black people in the training data, and not enough employees tested the feature before it was publicly unveiled.

Computer vision today is far beyond what it was then, but tech giants are still worried about repeating the same mistake, and camera apps from Google, Apple, and other big companies are still insensitive to, or deliberately avoid, recognizing most primates.

The best way to prevent a mistake from happening again, it seems, is to lock the feature in a dark room rather than fix it. And history did repeat itself: in 2021, Facebook apologized after its AI labeled black people as ‘primates’.

Such situations are all too familiar to people of color and other groups disadvantaged on the internet.

Last October, several researchers at Oxford University asked Midjourney to generate images of ‘black African doctors treating white children’, reversing the traditional image of ‘white saviors’.

The researchers’ request was clear enough, but of the 350+ images generated, 22 featured white doctors, and the black doctors were always accompanied by giraffes, elephants, and other African wildlife, so “you don’t see any African modernity.”

With everyday discrimination on one side and Google’s distortion of facts to create a false sense of equality on the other, there is no easy answer and no model that treats everyone evenly; striking a balance that satisfies everyone is, I’m afraid, harder than walking a tightrope.

Take portrait generation: if the AI is asked to depict a particular period in history, it would arguably be better to reflect the real situation, even if the result looks less ‘diverse’.

If the prompt is “an American woman”, however, the output should be more ‘diverse’. The difficulty is how the AI can reflect reality, or at least not distort it, in a limited number of pictures.

Even among people who are all white or all black, age, build, hair, and other characteristics differ; each is an individual with unique experiences and perspectives, yet all live in a shared society.

When one user used Gemini to generate Finnish women, only one of the four images was of a black woman, so he joked, “75%, score C.”

Someone also asked Google if, after improving the model, it would “generate whites 25% of the time instead of 5%.”

Many problems cannot be solved by technology alone; sometimes they come down to perception. That is partly why AI luminaries like Yann LeCun support open source, where users and organizations stay in control and can add or remove safeguards as they see fit.

Amid the Google drama, some people kept their cool and suggested practicing prompt writing first. Instead of speaking in general terms of white or black people, write something like “Scandinavian women, portrait taking, studio lighting”: the more specific the request, the more precise the result, and the more general the request, the more generic the result is likely to be.

Something similar happened last July, when an Asian student at MIT used the AI tool Playground AI to make a profile photo look more professional and was turned into a white person with a lighter complexion and bluer eyes. The student’s post about it on X attracted a lot of discussion.

The founder of Playground AI responded that the model cannot be effectively prompted with that kind of instruction, so it falls back on more generic results.

Changing the prompt from “make it a professional Collage photo” to “studio background, sharp lighting” might have yielded better results, but the episode does show that many AI tools do not teach users how to write prompts, and that their datasets are centered on white people.

Any technology has the potential for mistakes and room for improvement, but may not always have a solution. When AI isn’t smart enough, the first people who can reflect and improve are humans themselves.