At 10 p.m. last night, the review embargo on the Apple Vision Pro was officially lifted.

It was a sleepless night: we watched review videos from nearly every major media outlet in the world so we could summarize them for you here. There is plenty of praise, and plenty of heartfelt criticism too.

Below, we look at the Vision Pro in five areas:

- Design and wearability
- Display quality
- Interaction experience
- Software adaptation
- Roundup of complaints

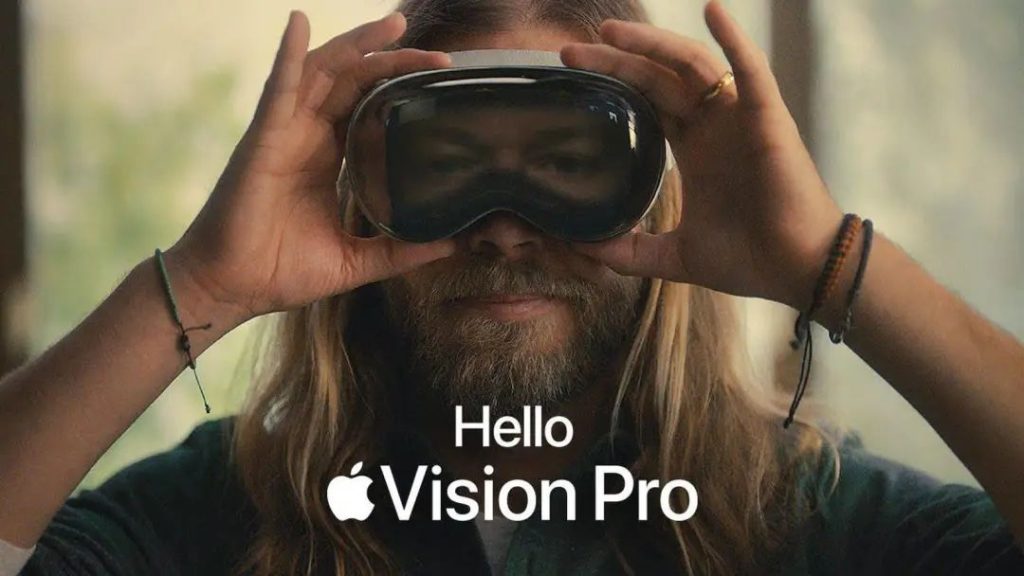

On your head, the Vision Pro is a head-turner

The Vision Pro isn't big; it is smaller than any VR headset we have seen before, even though it looks bulky in Apple's official promotional images.

In The Verge's on-head demo, the Vision Pro did not look oversized and fit the face closely (which may partly be down to the presenter's build). In videos from Marques Brownlee, iJustine, and the Wall Street Journal, it looked more like a pair of ski goggles than a headset.

Compared with the many plastic VR headsets on the market today, the Vision Pro's materials are a natural extension of Apple's existing design language: magnesium and carbon fiber with an aluminum casing. In other words, it is very Apple.

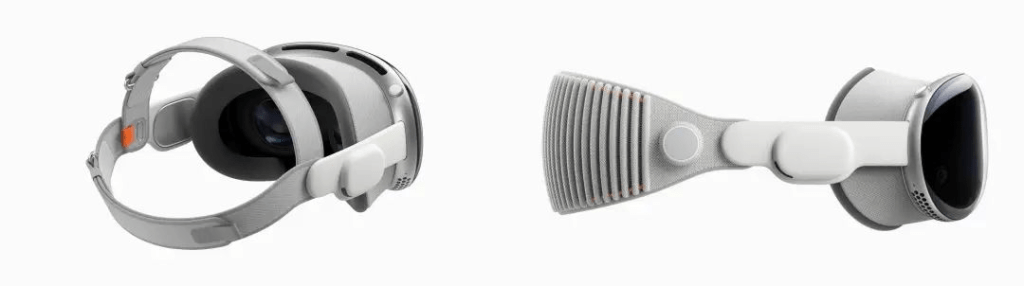

Before you even put it on, the highlight is the hardware around the device, which is, without exaggeration, a collection of Apple products: the headband looks like it came from the AirPods Max, and the knob is an oversized Apple Watch crown.

Tech blogger Marques Brownlee shows off the details of the Vision Pro packaging, which includes two styles of headbands.

The Solo Knit Band is the one we've seen most often in promotional images: a single wide band that wraps around the back of your head. The Dual Loop Band adapts to different head shapes through the length and tension of its two straps.

Multiple reviewers agree that the Dual Loop Band is considerably more comfortable than the single-loop band, even if it flattens your hair more.

Brownlee also received the dedicated Vision Pro travel case. Note that it isn't included in the box: it costs $199 separately, and it looks like a large, soft loaf of bread.

Glasses wearers need the custom Zeiss optical inserts, which come separately in a small box and attach magnetically. According to The Verge, though, wearing contact lenses with the headset doesn't affect the display at all.

The Digital Crown on top of the device adjusts the volume and the level of immersion. In its review, The Verge demonstrated two immersion levels, including a semi-see-through mode that shows both the virtual content and the real environment around it.

On the other side, opposite the knob, is a dedicated button for capturing spatial videos and photos. According to The Verge's tests, though, the photos and videos it captures are nowhere near as sharp as those from an iPhone.

You might expect the Vision Pro to get hot after a while like any other device, but according to The Verge's hands-on experience it doesn't, thanks to its built-in cooling fan. To Apple's credit, the fan makes no sound and causes no vibration while running; you can't even feel it.

Display – realism beyond 4K

In its pre-launch training for the Vision Pro, Apple explicitly told employees not to use the term "VR" when demonstrating or promoting the device to customers.

But The Verge's review put it bluntly:

"It's a VR headset." In other words, whatever Apple calls it, it is still a VR headset.

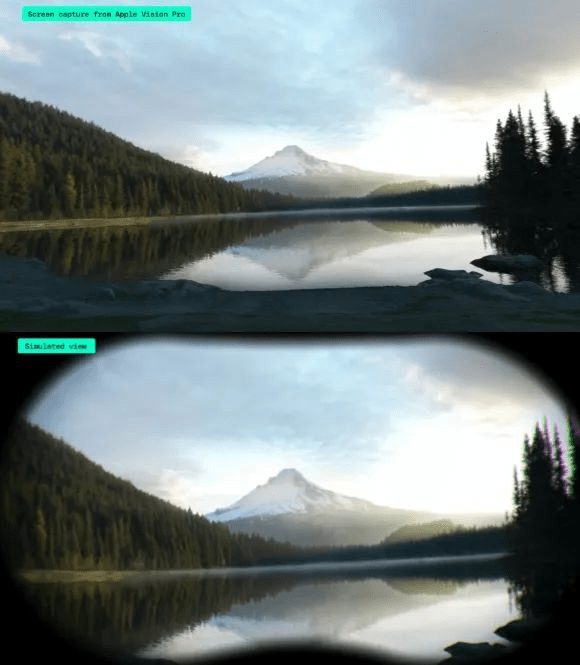

The reason is simple: the Vision Pro is not an optical see-through (OST) device. What you see in it is not the environment itself but an image of it, captured by the cameras and reproduced on the displays.

Even so, thanks to its extensive set of sensors, the Vision Pro can very nearly fool your eyes into taking that image for reality.

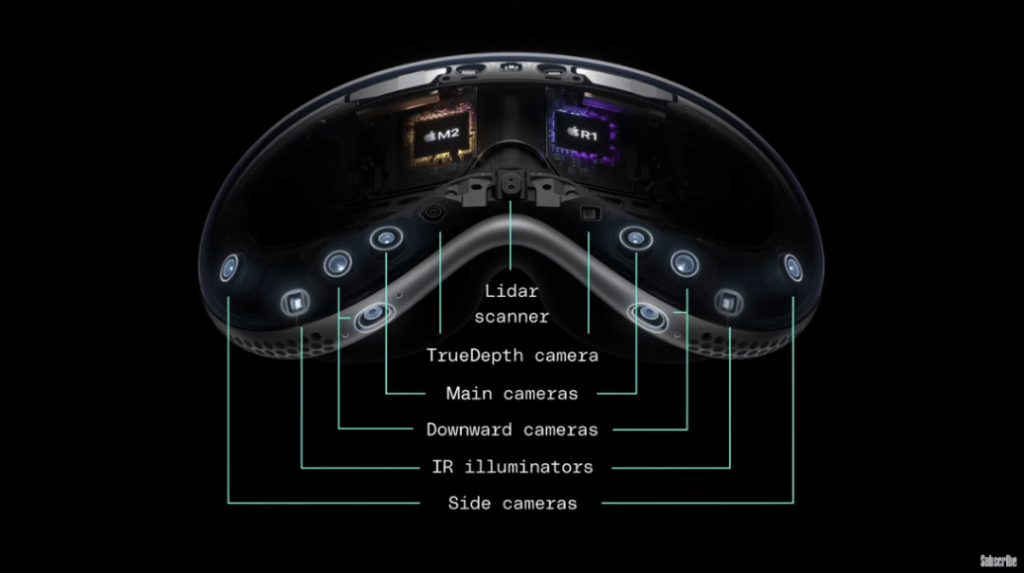

Apple uses 12 cameras (2 RGB, 4 downward-facing, 2 outward-facing, 4 internal IR) plus 5 sensors (including a dToF sensor) as a stand-in for the human eye, making the outside world look as real as possible.

Another contributor to the illusion is the device's micro-OLED display: its panels pack a total of 23 million pixels, each just 7.5 microns across, about the size of a red blood cell.

This top-tier hardware lets the Vision Pro deliver convincing "video passthrough", the ability to reproduce the real scene around you, and The Verge singled out passthrough as the heart of Apple's headset experience.

Passthrough latency is very low: only 12 milliseconds elapse between capturing the scene and showing it on the display. That figure includes the cameras' own exposure time, so the data itself is processed even faster.

That is roughly one frame's worth of delay at the display's refresh rate, so each new image is ready almost as soon as the previous one has been shown. The Verge found that the 12-millisecond figure held up in real-world testing: they could use their phones while wearing the Vision Pro without distortion or stuttering in the image.

However, the quality of the passthrough image depends on how bright the surroundings are. In low light it gets noticeably noisier, a problem shared by every VR headset.

Spatial operating system – using the body as a remote control

Today, interaction with smart wearables broadly falls into three categories:

- Physical controls built into the device itself, operated by sliding, pressing, rotating, and so on.
- External input devices such as remotes, phones, handheld controllers, and rings.
- Gestures, voice, and similar natural input.

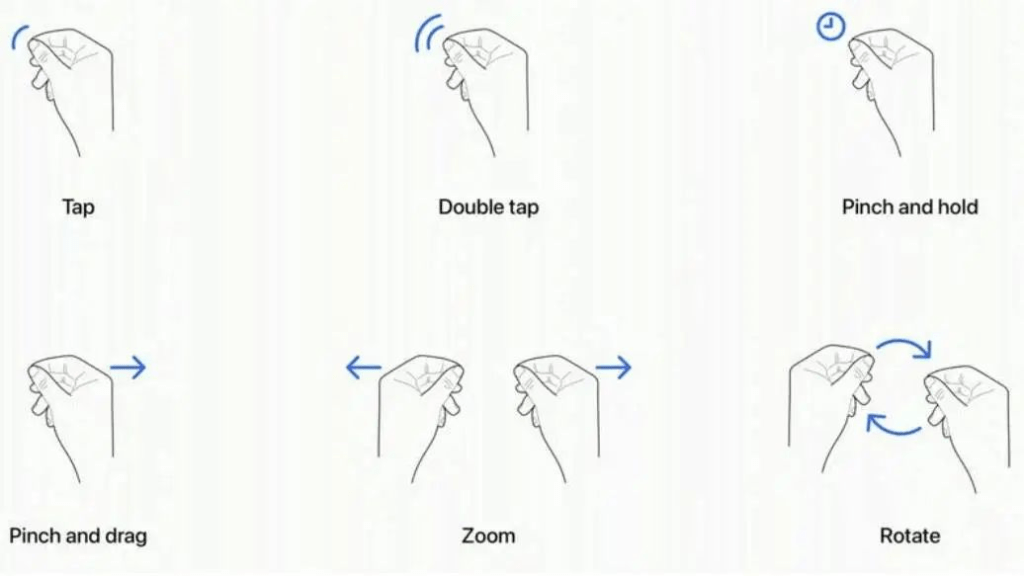

The Apple Vision Pro falls into the third category and takes it all the way, combining hands, eyes, and voice: your eyes position the cursor (the mouse pointer), a finger pinch confirms the selection (the mouse click), and your voice serves as the input method (the keyboard).

The Verge commented:

“This goes beyond any hand-eye tracking system in the mass market.”

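The Vision Pro starts responding as soon as it sees your eyes. Strictly speaking, "Optic ID" is the name of Apple's iris-recognition login feature; the interaction system itself is built on eye and hand tracking.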

Users simply look at the element they want to control, then use the appropriate gesture to act on it. The Verge said it felt like a "superpower" the first time they tried it.

The Wall Street Journal also showed off a practical use it called "cyber cooking": placing timers anywhere in the space around you. For anyone who loves to cook but tends to forget things, the Vision Pro should be genuinely useful.

The Vision Pro's external cameras also cover a very wide area, picking up hand gestures almost anywhere in front of your body.

Other headsets generally require you to hold your hands up in front of you to manipulate what's on screen; the Vision Pro lets you gesture from the most natural position. Even with your hand resting on your leg while you look ahead, the eye-and-hand controls work easily.

Software adaptation – Easter eggs and minefields

App support on visionOS is one of the hurdles the Vision Pro must clear to reach the mass market. After pre-orders opened on January 19, the biggest news was that Netflix, YouTube, and Spotify would not be releasing Vision Pro apps.

Although only about 250 apps have so far been designed specifically for visionOS, Apple can still make the Vision Pro worthwhile through hardware and software integration across its ecosystem.

The Vision Pro gives the Mac a huge 4K virtual display, and the Mac's keyboard and trackpad can be used directly as input for the Vision Pro. Working alongside a Mac is a good use of the Vision Pro's VR capabilities.

When the Vision Pro sees a MacBook in front of it through its video feed, it shows a connect option above it. Selecting it mirrors the Mac's screen into the headset in one step.

Note, however, that although the virtual screen can be stretched up to 50 inches, it is still just one display; you can't split it into several the way you can connect multiple physical monitors to a Mac.

On the other hand, while connected to a Mac you can keep using Vision Pro apps alongside the mirrored Mac window.

With Continuity, you can also use your Mac's keyboard and mouse to control the Vision Pro itself.

When you type on a Bluetooth keyboard, a text preview window appears above your hands so you can see what you're typing. This is the first time true AR computing has been available on a mainstream device, and it is in small touches like this that the line between the physical and the virtual starts to blur.

In The Verge's testing, the Vision Pro looked most like a productivity tool when running professional editing software such as Lightroom on a connected Mac.

Working with the iPhone

The Verge calls the pairing with the iPhone 15 Pro Max the Vision Pro's "best feature": you can shoot spatial video on the phone and watch it on the Vision Pro, with the iPhone currently recording spatial video at 1080p and 30 fps.

While there isn't much AR functionality, the Vision Pro packs in plenty of MR/VR features, especially its immersive viewing mode, where you can even choose the environment you want to be in. The imaging is very realistic, with colors from the screen reflecting onto the surroundings.

The Verge even suggests it will be the biggest and best TV many people have ever owned, with many features that traditional TVs can't match.

Overall, visionOS is designed around eye tracking and is based on iPadOS.

But there are significant differences between visionOS and iPadOS. visionOS can run apps from all three platforms at once (visionOS, iPadOS, and a mirrored macOS screen), and when too many windows pile up, you can clear them with Siri and a double press of the knob, much like a three-finger swipe on a MacBook to reveal the desktop.

The Verge calls it "the most comprehensive window management system yet."

Now for the complaints.

Expensive – and heavy, too

The headset alone weighs 600 to 650 grams; add the headband and light seal and it nearly matches the 12.9-inch iPad Pro (682 grams). Some other headsets are heavier (the Quest Pro weighs 722 grams), but they use a one-piece design in which the battery helps counterbalance the front of the device. The Vision Pro's split design takes the battery off your head, which solves the battery-weight problem, but it also shifts the headset's center of gravity forward, so Apple's headset isn't well suited to wearing for long periods.

As a result, there are no real VR games or fitness apps for the Vision Pro, and few people would want to move around with what amounts to a tablet strapped to their face.

Limited field of view

The Vision Pro's field of view is nothing like that of the human eye; it's more like looking at the world through binoculars. There are color fringes around the edges of the lenses, where resolution is also mediocre, which shrinks the usable field of view even further.

The somewhat creepy EyeSight

EyeSight isn't as good as advertised: it's a fairly dim, reflective, low-resolution OLED screen, and what it shows is hard to make out once the ambient light gets even a little brighter.

Simply put, it looks far less vivid than in Apple's promotional images.

EyeSight is meant to be a window for communication, but despite Apple's claim that it "breaks down the communication barrier", it doesn't really overcome the isolation of VR; The Verge repeatedly describes using the Vision Pro as being "in there". There is no real eye contact between the wearer and the person they're talking to, and the display itself is a bit "creepy".

Eye tracking is a bit tiring

Eye tracking works well, but it has a drawback: you can't operate it blind. It forces you to look at whatever you want to manipulate, which is the opposite of how we're used to using input devices on phones and computers. It can even be distracting: very few people consciously track where their mouse pointer is, but on the Vision Pro you effectively have to.

Face modeling – a brutal reality check

Before you can make a FaceTime video call, you need to create a 3D model of yourself, which Apple calls a Persona.

The Wall Street Journal's review shows the whole process: you hold the Vision Pro facing yourself, follow the voice prompts to turn your head and change your facial expression, and the device generates a 3D model of your face in about a minute.

However, the resulting digital avatar is rather stiff and looks like a wax figure. When a Wall Street Journal host used the Vision Pro to video-call a friend, the friend teased that it "looked like too much Botox".

Hair Killer – Headbands

Whichever headband you use, it will mess up your hair, though the single-loop knit band is much kinder to it than the dual-loop band.

Speakers everyone can hear

The Vision Pro's loud external speakers are a plus, but being that loud is also a minus: unless you wear headphones, people around you will definitely hear what you're doing.

The first reviewers also offered some closing verdicts on the Vision Pro; here are three of the most pertinent:

Wall Street Journal:

The Vision Pro currently offers a great way to work and watch movies, so is it worth $3,500? It depends on your wallet. But in the 24 hours I’ve been using it, I can see Apple’s future vision here.

iJustine:

The only problem is that a lot of the footage I've shown you guys doesn't do justice to what the Vision Pro actually shows; in person it looks so sharp and everything feels so real. You have to try it out, and if you're not going to buy one, find a friend who has and try it in guest mode. I think a lot of other brands, not just Apple, will move toward spatial computing.

The Verge:

Since I first put on my Vision Pro, these are the main questions that have sprung up in my mind:

That wraps up our summary of the world's first Vision Pro reviews. Through the eyes of the first people to get their hands on it, we've finally glimpsed what "spatial computing" looks like. But since we haven't tried it ourselves yet, this can only take the edge off our curiosity, and many details remain unknown.

Soon, though, Avanelle will bring you its own real, hands-on review of the Vision Pro, because the unit we bought arrives on Friday 😉!